Clouds come in flavors. Amazon Web Services (AWS) is different from Microsoft Azure, which is different from VMware Cloud on AWS, which is different from clouds based on VMware vCloud Director. There are dozens of cloud platforms available, and thousands of cloud providers. Navigating this increasingly crowded space is part of a modern systems administrator's job, and software plays an increasingly important role in making sense of it all.

One problem posed by multiple clouds is the concept of "t-shirt sizes." It's common for public cloud providers to offer workload instances with a fixed combination of resources: RAM, virtual CPUs, and storage. These pre-packaged instances are usually named like t-shirt sizes: small, medium, large, and so forth.

A small instance on one cloud provider is likely to differ slightly from a small instance on another. At a large enough scale, where workloads are designed to scale up as needed (and scale down when possible), the differences in sizing don't really matter. Most organizations, however, don't operate at that scale, or with cloud-native workloads. For us mere mortals, the differences in instance sizes between cloud providers do matter, and that makes resource management important.
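To make the sizing mismatch concrete, here is a minimal sketch that picks the smallest instance meeting a workload's vCPU and RAM requirements from two catalogs. The provider names, instance names, and resource figures are hypothetical and chosen only for illustration. They are not any real provider's offerings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    ram_gib: float


# Hypothetical catalogs: names and figures are illustrative, not real provider offerings.
CATALOGS = {
    "provider_a": [
        InstanceType("small", 1, 2.0),
        InstanceType("medium", 2, 4.0),
        InstanceType("large", 2, 8.0),
    ],
    "provider_b": [
        InstanceType("small", 2, 2.0),
        InstanceType("medium", 2, 8.0),
        InstanceType("large", 4, 16.0),
    ],
}


def smallest_fit(catalog, vcpus_needed, ram_needed_gib):
    """Return the smallest instance (by vCPUs, then RAM) meeting both requirements, or None."""
    candidates = [
        i for i in catalog if i.vcpus >= vcpus_needed and i.ram_gib >= ram_needed_gib
    ]
    return min(candidates, key=lambda i: (i.vcpus, i.ram_gib), default=None)


for provider, catalog in CATALOGS.items():
    fit = smallest_fit(catalog, vcpus_needed=2, ram_needed_gib=6)
    print(provider, fit.name if fit else "no fit")
```

With these made-up catalogs, the same workload (2 vCPUs, 6 GiB RAM) lands on a "large" instance with one provider and a "medium" instance with the other. This is why t-shirt labels alone can't be compared across clouds.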

Beyond Resources

The differences between cloud providers go beyond resource allocation. The larger public cloud providers offer Infrastructure-as-a-Service (IaaS) that extends past virtual machines (VMs) and containers to additional infrastructure services, such as databases. They also offer Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS).

Administrators who choose the major public cloud providers can forgo building their own VMs or containers to host, for example, a website. Instead, they could consume a website PaaS, such as hosted WordPress or Drupal. This is a convenient, and generally inexpensive, way to stand up a modest website, but one couldn't run Netflix on AWS's WordPress-as-a-Service. At some point, workloads need their own VMs and/or containers.

Similarly, cloud providers differ in their approaches to security, networking, data protection, and more. Each cloud provider an organization is considering needs to have its approach to IT infrastructure analyzed and understood. That information can then be used to compare the requirements for running a given workload on each provider.

Which resources need to be allocated for a workload to operate within acceptable parameters? Are there special considerations for backing up that workload, making it resilient to outages, or making it able to scale to meet demand?

Most importantly, once all elements are fully considered, where is it most cost-efficient to run that workload? And how does that choice affect the workload's interaction with the rest of the organization's IT infrastructure, and with any users consuming that workload?
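The cost question depends on more than the instance price. Add-on services such as backup or load balancing can change the answer. Below is a minimal sketch of that comparison. All prices, provider names, and add-on names are hypothetical, and the 730-hour month is a simplifying assumption. Real pricing varies by region, commitment term, and discounts.

```python
# Simplifying assumption: one month is treated as 730 hours of runtime.
HOURS_PER_MONTH = 730

# Hypothetical quotes: provider -> (hourly instance price, instance count, {add-on: monthly fee}).
QUOTES = {
    "provider_a": (0.10, 3, {"backup": 40.0, "load_balancer": 18.0}),
    "provider_b": (0.09, 3, {"backup": 95.0}),
}


def monthly_cost(instance_hourly, instance_count, addon_monthly):
    """Compute instance hours for the month plus flat monthly add-on fees."""
    return instance_hourly * instance_count * HOURS_PER_MONTH + sum(addon_monthly.values())


def cheapest_provider(quotes):
    """Return the provider with the lowest total monthly cost, plus every provider's total."""
    costs = {provider: monthly_cost(*quote) for provider, quote in quotes.items()}
    best = min(costs, key=costs.get)
    return best, costs


best, costs = cheapest_provider(QUOTES)
print(best, {p: round(c, 2) for p, c in costs.items()})
```

With these illustrative numbers, the provider with the cheaper instances ends up more expensive overall (about 292.10 versus 277.00 per month) once its add-on fee is included.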

Call the Experts

Systems administrators have better things to do than keep on top of all the various cloud providers' individual quirks. New cloud providers are regularly entering the market, and the variables that might affect how efficiently a workload operates on a given cloud provider change frequently.

Since all organizations considering cloud computing face the same challenges, it makes little sense for each organization to replicate the work of gathering and analyzing this data. Increasingly, this task is being taken on by vendors. A vendor can dedicate a team to keeping track of the ins and outs of cloud computing, and share those insights with their entire customer base.

In one sense, this is no different from how channel partners have always acted. Managed Service Providers (MSPs), Systems Integrators (SIs) and other channel partners have for decades served customers as a sort of Expertise-as-a-Service. If the customer didn't have in-house storage specialists with time to keep up to date with all the latest storage arrays, they'd turn to a channel partner to find the right solution for their needs, and to keep that solution running.

Today, organizations are turning to cloud cost optimization specialists to get a handle on their cloud usage, especially where they are engaging multiple cloud providers. The difference between these specialists and traditional channel partners is usually one of scale.

Software Required

Helping an organization source half a dozen storage arrays and choose among a dozen or so storage vendors can be a project that takes months. Proof-of-concept testing, negotiation, and data migration are all part of the selection process for just one component in a data center.

Organizations turning to cloud computing often want to understand the costs of every workload under management. Costs (and timeframes) are required at several levels: individual workloads, collections of workloads acting as a service, and a bulk migration of all of an organization's IT.
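Rolling per-workload estimates up to service and organization level is mechanical once each workload is tagged with the service it belongs to. The sketch below assumes exactly that. The workload names, service tags, and monthly figures are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-workload monthly cost estimates, each tagged with its service.
WORKLOADS = [
    {"name": "web-01", "service": "storefront", "monthly_cost": 120.0},
    {"name": "web-02", "service": "storefront", "monthly_cost": 120.0},
    {"name": "db-01", "service": "storefront", "monthly_cost": 410.0},
    {"name": "erp-app", "service": "erp", "monthly_cost": 650.0},
]


def rollup(workloads):
    """Aggregate workload costs per service, then total them for a full migration."""
    by_service = defaultdict(float)
    for workload in workloads:
        by_service[workload["service"]] += workload["monthly_cost"]
    total = sum(by_service.values())
    return dict(by_service), total


services, total = rollup(WORKLOADS)
print(services, total)
```

The hard part in practice is not the arithmetic. It is knowing which workloads actually belong together as a service, which is where dependency data comes in.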

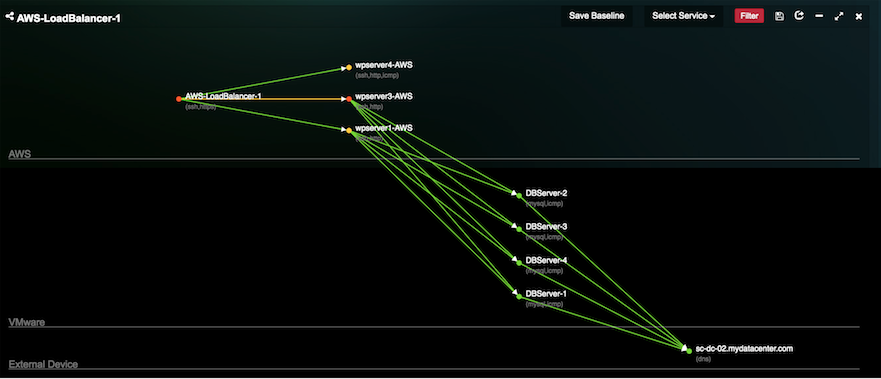

Figuring out costs requires understanding the workloads an organization currently has, as well as the workloads they plan to implement. This requires baselining workloads, understanding the interactions of those workloads with one another, as well as how those workloads interact with the infrastructure upon which they execute.
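One common way to baseline a workload is to summarize each metric by a high percentile rather than its peak, so a single spike doesn't drive sizing. The sketch below uses the nearest-rank 95th percentile. The choice of percentile and the sample values are assumptions for illustration, not a prescribed method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list of samples."""
    ordered = sorted(samples)
    # Multiply before dividing so integer inputs give an exact rank.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def baseline(metrics, pct=95):
    """Summarize each metric's samples as its pct-th percentile."""
    return {name: percentile(values, pct) for name, values in metrics.items()}


# Hypothetical CPU utilization samples (%), including one short-lived spike to 90.
cpu = [20, 22, 21, 25, 23, 24, 22, 21, 26, 23, 22, 24, 25, 21, 23, 22, 24, 27, 23, 90]
print(baseline({"cpu_pct": cpu}))
```

With 20 samples, the 95th percentile is the 19th-smallest value (27 here), so the lone spike to 90 is excluded from the baseline.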

Collecting all the data about an organization's workloads and the various cloud providers available, and then analyzing it, is an intimidating task even for seasoned experts. Beyond a certain scale, it becomes impossible to do entirely manually, even with experts.

Software is required to gather this data and make sense of it. The experts are best used helping to design the relevant software and ensuring that the data fed into it is correct. Crunching the numbers is what computers are good at, and that's where software comes in.

Multi-Cloud Mania

Organizations tend to engage multiple cloud providers for various reasons: because they’re concerned about resiliency of their workloads against failure, they’re seeking wider geographic dispersal than any one provider can offer, or they’re looking to keep costs down by putting the right workload on the right provider’s cloud.

No special software is required to keep copies of workloads on multiple providers, and guarding against the failure of a single cloud provider isn't particularly difficult. Widely dispersing workloads across multiple cloud providers to obtain geographic coverage, however, often requires keeping an eye on regional usage and optimizing workload sizing to maintain availability. Individual regions in this scenario will often have much smaller workload sizes, but may experience rapid fluctuations in demand.
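Per-region sizing can be sketched as a simple capacity calculation: scale each region's current demand by a headroom factor, divide by what one instance can handle, and never drop below a minimum instance count for availability. The region labels, demand figures, per-instance capacity, 25% headroom, and two-instance minimum are all hypothetical assumptions.

```python
import math


def replicas_per_region(demand_rps, capacity_rps_per_instance, min_replicas=2, headroom=1.25):
    """Instances needed per region; min_replicas keeps a spare in even the quietest region."""
    plan = {}
    for region, rps in demand_rps.items():
        # Scale demand by the headroom factor, then round up to whole instances.
        needed = math.ceil(rps * headroom / capacity_rps_per_instance)
        plan[region] = max(min_replicas, needed)
    return plan


# Hypothetical current demand (requests per second) in three regions.
demand = {"region-a": 900, "region-b": 120, "region-c": 2400}
print(replicas_per_region(demand, capacity_rps_per_instance=500))
```

Re-running a calculation like this as regional demand shifts is what "keeping an eye on regional usage" amounts to in practice. The floor keeps small regions available even when their demand alone would justify a single instance.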

Engaging multiple providers for cost optimization is the most difficult undertaking. It typically requires real-time monitoring of all workloads, and storing that performance data for historical analysis. This analysis helps to inform predictions about future workload requirements. In turn, this drives the selection of a cloud provider, instance size, and any additional services (such as a content distribution network) that may be required.
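As a toy illustration of historical data informing a sizing prediction, the sketch below fits a straight line to past peak vCPU usage using ordinary least squares. It projects that line one period ahead, adds a safety margin, and rounds up. The linear model, the 20% headroom, and the sample history are assumptions. Production forecasting typically also accounts for seasonality and bursts.

```python
import math


def linear_forecast(history, periods_ahead=1):
    """Fit y = a + b*t by ordinary least squares over t = 0..n-1 and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept + slope * (n - 1 + periods_ahead)


def required_vcpus(history, periods_ahead=1, headroom=1.2):
    """Forecast peak vCPU demand, add a safety margin, and round up to whole vCPUs."""
    return math.ceil(linear_forecast(history, periods_ahead) * headroom)


# Hypothetical weekly peak vCPU usage for one workload.
weekly_peaks = [2.0, 2.5, 3.0, 3.5]
print(linear_forecast(weekly_peaks), required_vcpus(weekly_peaks))
```

Here the trend projects a peak of 4.0 vCPUs next week, which becomes 5 vCPUs after headroom. That figure would then feed the choice of instance size and provider.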

Uila can help. Uila brings together data from all of an organization's workloads across all infrastructures, making multiple clouds as easy to manage as one. For more details, see Uila's Multi-Cloud monitoring and analytics solution.
